Tracking and Blocking Bots and Crawlers

The Darkvisitors plugin now supports the new tracking feature, so you can watch which crawlers and bots visit your site.

In recent days, I've noticed a surge of posts on my timeline complaining about bots and crawlers and asking how to block them. That makes this a good opportunity to draw attention to the latest update of the Darkvisitors plugin.

I published this small plugin a few months ago. It delivers a robots.txt that can be used for various purposes:

- To point crawlers to a sitemap

- To grant or deny access to crawlers

- To deny access to AI crawlers/bots

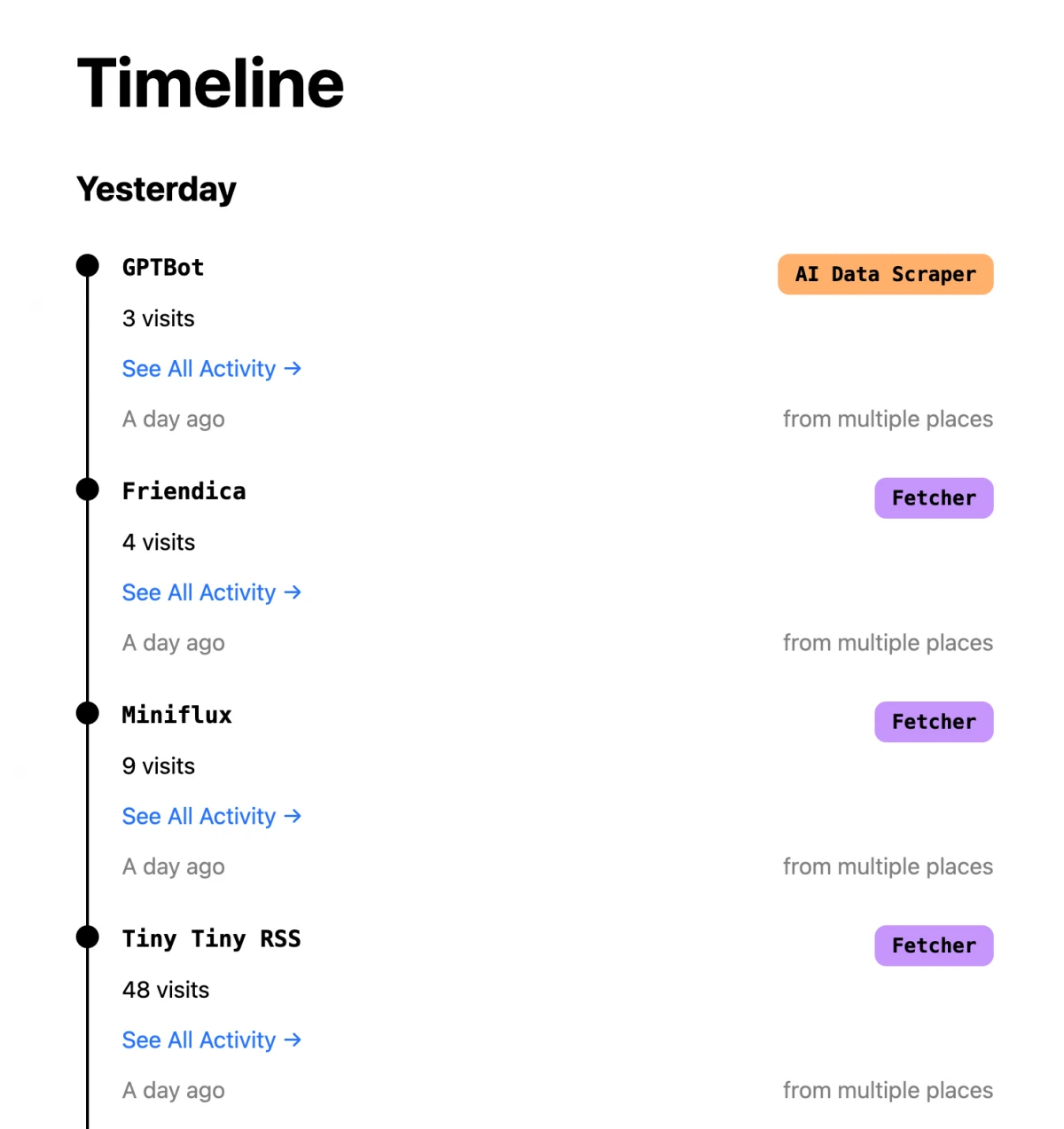

The main function of the plugin is to block AI crawlers, such as those that supply data to ChatGPT. Different types of crawlers can be blocked separately. For example, you can deny ChatGPT access to the site while still allowing Perplexity.

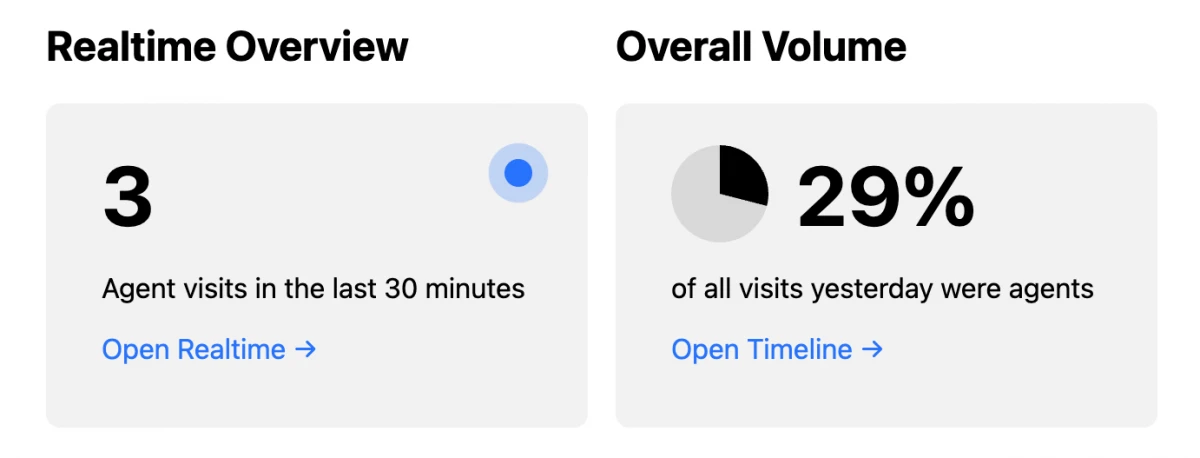

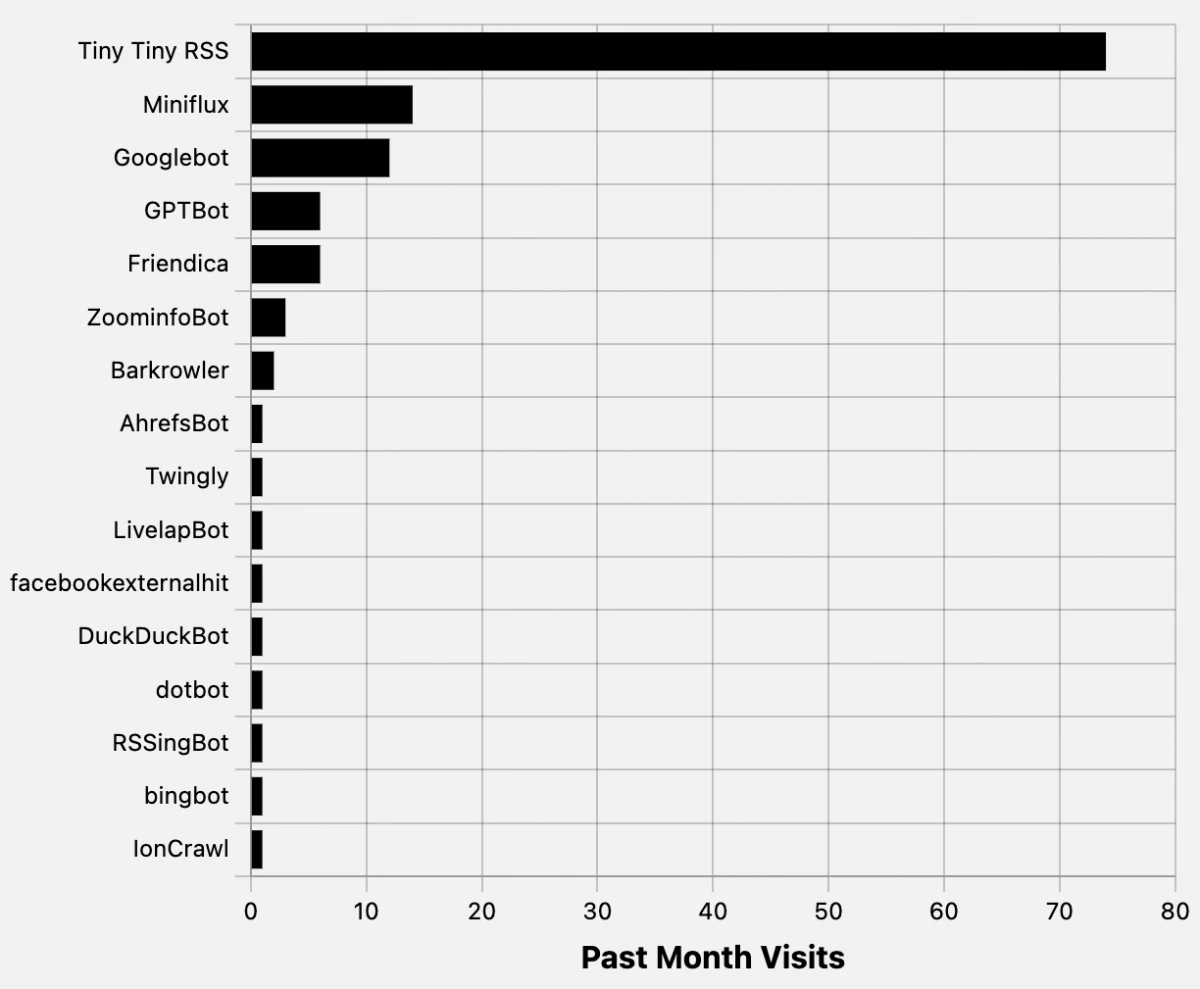
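To make the selective blocking concrete, here is a minimal Python sketch of how such a robots.txt could be assembled. It is not the plugin's code (the plugin is written for Kirby and gets its agent list from Dark Visitors); the function name `build_robots_txt` and the example URL are made up for illustration, and `GPTBot` is used as the user agent token of OpenAI's crawler.

```python
def build_robots_txt(blocked_agents, sitemap_url=None):
    """Return robots.txt text that denies the listed agents and allows all others."""
    lines = []
    for agent in blocked_agents:
        # One group per blocked crawler: deny the whole site.
        lines.append(f"User-agent: {agent}")
        lines.append("Disallow: /")
        lines.append("")
    # Every agent not listed above (for example Perplexity's) stays allowed.
    lines.append("User-agent: *")
    lines.append("Disallow:")
    if sitemap_url:
        lines.append("")
        lines.append(f"Sitemap: {sitemap_url}")
    return "\n".join(lines) + "\n"


# Hypothetical example: block OpenAI's crawler, allow everything else.
robots = build_robots_txt(["GPTBot"], sitemap_url="https://example.com/sitemap.xml")
print(robots)
```

Keep in mind that robots.txt is only a request: well-behaved crawlers honor it, but nothing forces a bot to comply.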

Until now, it was unclear which crawlers were actually accessing the site and which pages they requested. With the latest update of the plugin, you can see this information. Darkvisitors.com now offers an API that is free for a large number of requests.

My Kirby plugin now supports this API and can send tracking calls if desired. You can then see on the Darkvisitors site which crawlers, bots, etc. are accessing your site.

The following data will be sent via API:

- The path accessed

- The request method (e.g. GET, POST)

- The request headers
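As a rough illustration, the three items listed above could be bundled into one JSON body like this. This is a sketch in Python, not the plugin's code; the helper `build_visit_payload` and the field names `request_path`, `request_method` and `request_headers` are assumptions chosen for the example, so check the Darkvisitors API documentation for the exact format.

```python
import json


def build_visit_payload(path, method, headers):
    """Collect the tracked request data in one dictionary (field names are assumed)."""
    return {
        "request_path": path,
        "request_method": method.upper(),
        # Copy the headers so later changes to the request do not alter the payload.
        "request_headers": dict(headers),
    }


# Hypothetical visit by a crawler.
payload = build_visit_payload("/blog/my-post", "get", {"User-Agent": "GPTBot/1.0"})
body = json.dumps(payload)
print(body)
```

Note that request headers can contain cookies or authorization tokens, which is worth knowing before you switch tracking on.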

The plugin uses a route that is triggered on every request. It starts an asynchronous tracking call and then immediately hands over to the next matching route, so the delay should be minimal. However, you should be aware that this happens with every page load.
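The pattern described above (start tracking, do not wait for it, continue with normal handling) can be sketched in Python as follows. This only illustrates the idea with a background thread; the plugin itself runs inside Kirby and may implement the asynchronous call differently. The names `track_then_continue`, `send_tracking` and `next_handler` are hypothetical.

```python
import threading


def track_then_continue(request, send_tracking, next_handler):
    """Start tracking in the background, then hand the request on immediately."""

    def safe_send():
        try:
            send_tracking(request)
        except Exception:
            pass  # a failed tracking call must never break the page

    worker = threading.Thread(target=safe_send, daemon=True)
    worker.start()
    # Do not wait for the tracking call; continue with the normal handler.
    return next_handler(request), worker


# Demo with stand-ins: "tracking" just records the request in a list.
tracked = []
response, worker = track_then_continue(
    {"path": "/", "method": "GET"},
    send_tracking=tracked.append,
    next_handler=lambda req: f"rendered {req['path']}",
)
worker.join()  # only needed in this demo, to inspect the result afterwards
print(response, tracked)
```

Swallowing errors in the background call is a deliberate choice here: tracking is a side effect, so a slow or unreachable API should not affect what the visitor sees.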

If you want to see which bots and crawlers are currently accessing your site without digging through logs or Webalizer, take a look at the new feature - it can be deactivated at any time. I find this very interesting and am curious to see what is really going on behind the scenes.